OpenAI, a leading pioneer in artificial intelligence, has unveiled GPT-4o mini, a revolutionary addition to its lineup of powerful language models. Designed with accessibility in mind, GPT-4o mini aims to democratize artificial intelligence by offering cutting-edge performance at a fraction of the cost of its predecessors. This breakthrough model boasts impressive capabilities in text and image processing, with future enhancements promising to expand its repertoire to include audio and video processing as well.

With GPT-4o mini, OpenAI is empowering businesses, developers, and individual users to harness the potential of AI for a wide array of tasks, from content generation and translation to data analysis and customer service automation. Whether you’re a seasoned AI practitioner or just starting your journey into this exciting field, GPT-4o mini offers a compelling blend of affordability, performance, and versatility.

Unleashing Affordable AI Power

One of the most striking aspects of GPT-4o mini is its remarkable affordability. OpenAI has made significant strides in optimizing its models, resulting in a pricing structure that’s considerably more budget-friendly than previous offerings. In fact, GPT-4o mini is more than 60% cheaper than its predecessor, GPT-3.5 Turbo, making it an attractive option for businesses of all sizes and individual users alike.

GPT-4o mini’s affordability represented by a stack of glowing coins

Pricing Details:

- Input Tokens: $0.15 per million tokens

- Output Tokens: $0.60 per million tokens

This cost-effective pricing model opens up a world of possibilities for AI integration into everyday workflows. From small startups to large enterprises, organizations can now leverage the power of GPT-4o mini without breaking the bank.

Performance That Surpasses Expectations

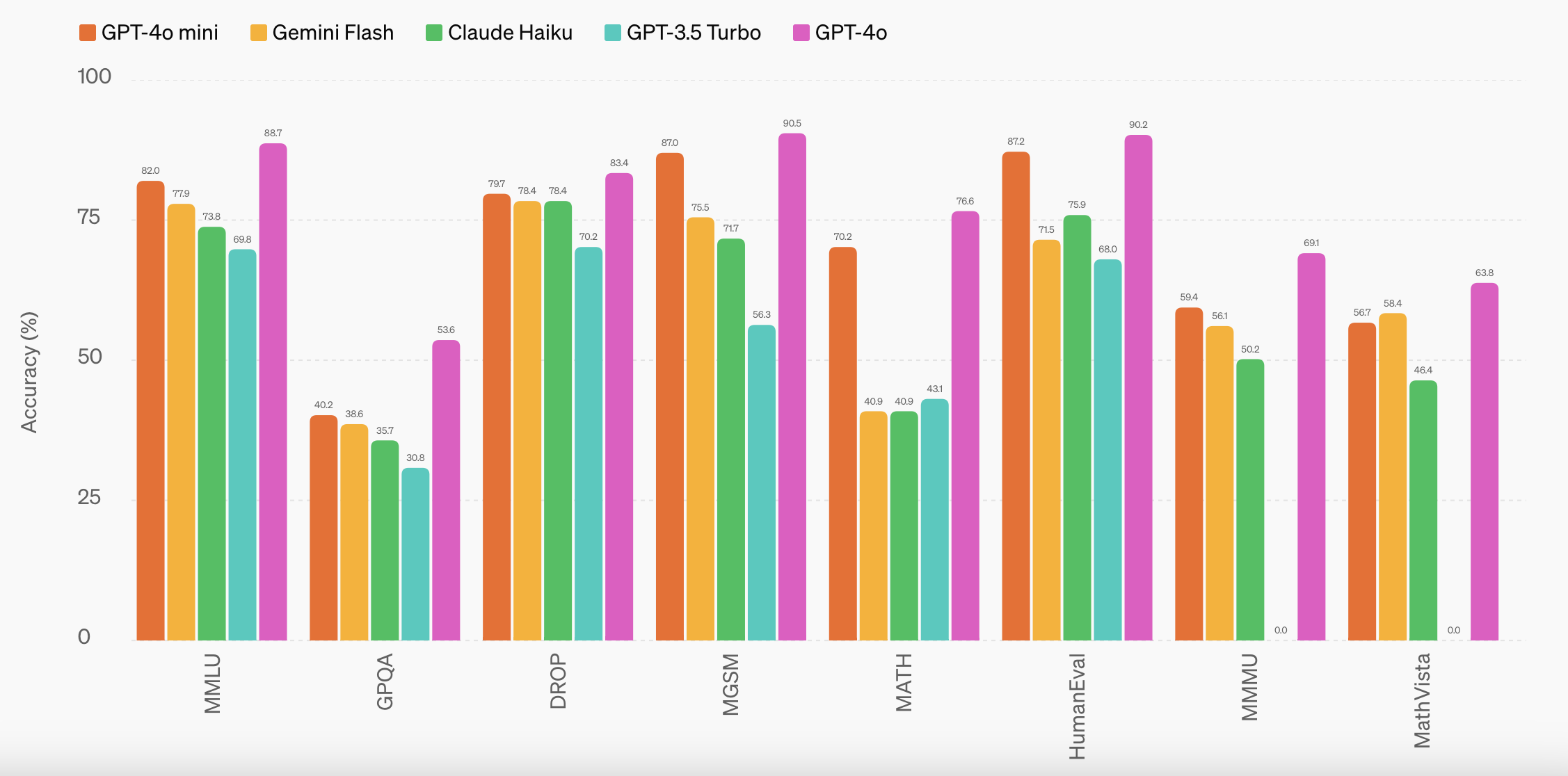

GPT-4o mini doesn’t just boast affordability; it also delivers exceptional performance, outshining its predecessors and competing models in various benchmarks. OpenAI has meticulously evaluated GPT-4o mini across a range of academic tasks, demonstrating its prowess in both textual intelligence and multimodal reasoning.

Performance comparison chart of GPT-4o mini with other AI models on benchmarks.

Benchmark Victories: A Testament to GPT-4o Mini’s Capabilities

- MMLU (Massive Multitask Language Understanding): GPT-4o mini achieved an impressive score of 82.0% on MMLU, surpassing other small models like Gemini Flash (77.9%) and Claude Haiku (73.8%). This demonstrates its superior understanding and reasoning abilities across diverse textual tasks.

- MGSM (Math Grade School Math): In mathematical reasoning, GPT-4o mini scored 87.0%, leaving behind Gemini Flash (75.5%) and Claude Haiku (71.7%). This showcases its potential for applications requiring numerical problem-solving.

- HumanEval (Coding Proficiency): GPT-4o mini’s coding prowess shines through with a score of 87.2% on HumanEval, outperforming Gemini Flash (71.5%) and Claude Haiku (75.9%). This makes it a valuable tool for developers and programmers.

- MMMU (Multimodal Multitask Language Understanding): GPT-4o mini also excels in multimodal reasoning, achieving a score of 59.4% on MMMU, compared to 56.1% for Gemini Flash and 50.2% for Claude Haiku. This capability is crucial for tasks that involve understanding and generating content based on both text and images.

These benchmark results solidify GPT-4o mini’s position as a top performer in the realm of small language models. Its ability to understand complex language, reason effectively, and tackle mathematical and coding challenges makes it a versatile tool for a wide array of applications.

Multimodal Capabilities: Beyond Just Text

GPT-4o mini is not limited to textual understanding. OpenAI has equipped it with the ability to process and understand visual information as well. This opens up a new realm of possibilities for applications that require both text and image comprehension.

GPT-4o mini processing text and images, with future support for audio and video.

Seeing the World: GPT-4o Mini’s Visual Processing

Currently, GPT-4o mini can accept both text and images as input through OpenAI’s API. This means it can analyze and generate content based on a combination of textual descriptions and visual cues. For example, you could ask GPT-4o mini to describe a picture, generate captions for images, or even answer questions about the content of a photograph.

The Future of Multimodal AI: Audio and Video on the Horizon

OpenAI is not stopping at text and images. The company has ambitious plans to expand GPT-4o mini’s capabilities to include audio and video processing in the near future. This will make it an even more powerful tool for content creators, educators, researchers, and businesses across various industries.

Imagine being able to ask GPT-4o mini to summarize a video, generate transcripts of audio recordings, or even create personalized video recommendations based on your preferences. These are just a few examples of the exciting possibilities that lie ahead as GPT-4o mini evolves into a fully multimodal AI model.

Real-World Applications: GPT-4o Mini in Action

GPT-4o mini’s versatility shines in real-world scenarios, where it has proven its mettle in tackling diverse tasks across various industries. Early adopters have already integrated GPT-4o mini into their workflows, reaping the benefits of its powerful capabilities and cost-efficiency.

People using GPT-4o mini in various scenarios for different tasks.

Streamlining Operations with GPT-4o Mini

- Ramp: This financial automation platform successfully utilized GPT-4o mini to extract structured data from receipt files, showcasing its potential for automating tedious manual tasks and enhancing efficiency in financial management.

- Superhuman: This email client leveraged GPT-4o mini to generate high-quality email responses based on conversation history. This exemplifies the model’s ability to understand context and produce relevant, personalized content, saving users time and effort in communication.

These are just a few examples of how GPT-4o mini is already making an impact in the real world. Its ability to analyze text, images, and (soon) audio and video opens up a plethora of opportunities for businesses and individuals to streamline operations, enhance customer experiences, and unlock new levels of productivity.

As more organizations explore the potential of GPT-4o mini, we can expect to see even more innovative and transformative applications emerge. This model is not just a technological advancement; it’s a catalyst for change, enabling a future where AI seamlessly integrates into our daily lives, making tasks easier, communication smoother, and information more accessible.

Safety First: Building Trust in AI

OpenAI recognizes the importance of building AI systems that are not only powerful but also safe and reliable. GPT-4o mini is no exception. It has been developed with a strong emphasis on safety, incorporating multiple layers of protection to minimize potential risks and ensure responsible AI use.

Shield with GPT-4o mini logo, representing its built-in safety features.

Built-in Mitigations: A Proactive Approach to Safety

From the earliest stages of development, OpenAI has integrated safety measures into GPT-4o mini. These mitigations include:

- Pre-training Filtering: The model is trained on a dataset that has been carefully filtered to exclude harmful content such as hate speech, adult material, and misinformation. This helps to prevent the model from learning or generating inappropriate responses.

- Reinforcement Learning with Human Feedback (RLHF): OpenAI employs RLHF to fine-tune the model’s behavior, aligning it with human preferences and values. This iterative process involves human trainers providing feedback on the model’s responses, helping it to become more accurate, reliable, and safe.

Instruction Hierarchy: A Novel Defense Against Misuse

GPT-4o mini is the first model to implement OpenAI’s innovative “instruction hierarchy” method. This technique strengthens the model’s ability to resist jailbreaks, prompt injections, and system prompt extractions. By making it more difficult for malicious actors to manipulate the model, this approach enhances its overall security and trustworthiness.

Expert Evaluations and Ongoing Monitoring

OpenAI has engaged over 70 external experts in fields like social psychology and misinformation to evaluate GPT-4o and identify potential risks. The insights gained from these evaluations have been instrumental in improving the safety of both GPT-4o and GPT-4o mini. Additionally, OpenAI continues to monitor the model’s usage in real-world applications, adapting and refining its safety measures as new challenges arise.

By prioritizing safety from the outset and employing a multi-layered approach, OpenAI is building trust in AI technology. GPT-4o mini stands as a testament to this commitment, demonstrating that powerful AI can be harnessed responsibly for the benefit of society.

Technical Specifications: Under the Hood of GPT-4o Mini

To fully appreciate GPT-4o mini’s capabilities, it’s important to understand its technical underpinnings. These specifications provide insights into how the model processes information and what it can achieve, drawing inspiration from its predecessor, GPT-4o.

Diagram illustrating GPT-4o mini’s technical specifications.

Context Window: A Broader View of Information

GPT-4o mini boasts a generous context window of 128,000 tokens. In practical terms, this means it can “remember” and consider a vast amount of text when generating responses. This is equivalent to roughly 2500 pages of a standard book, enabling the model to maintain coherence and relevance even in lengthy conversations or when analyzing extensive documents.

Output Tokens: Flexible Response Length

The model is capable of producing up to 16,000 output tokens per request. This provides flexibility in generating responses of varying lengths, from concise summaries to detailed explanations.

Knowledge Cut-Off: Up-to-Date Information

GPT-4o mini’s knowledge base is current up to October 2023. This ensures that the model has access to relatively recent information, making it a valuable tool for tasks that require up-to-date knowledge.

Enhanced Multilingual Support: Breaking Language Barriers

Thanks to the improved tokenizer shared with GPT-4o, GPT-4o mini excels at handling non-English text. This is a significant advantage for users and developers working with multilingual content, as it ensures more accurate and nuanced understanding across a wider range of languages.

By understanding these technical specifications, users can make informed decisions about how to best utilize GPT-4o mini for their specific needs. Whether it’s analyzing large documents, generating creative content, or engaging in multilingual conversations, GPT-4o mini offers the technical capabilities to deliver impressive results.

Availability and Future Developments: Embracing the Evolution of GPT-4o Mini

OpenAI is dedicated to making GPT-4o mini accessible to a wide range of users and developers. The model is currently available through several channels, with exciting developments on the horizon to further enhance its capabilities.

Road towards future developments of GPT-4o mini, including API integration, Chat use, and fine-tuning.

API Access: Integrating GPT-4o Mini into Your Applications

Developers can readily integrate GPT-4o mini into their applications and services through OpenAI’s API (Application Programming Interface). This allows for seamless integration of the model’s text and image processing capabilities into various software solutions, including chatbots, content creation tools, data analysis platforms, and more.

Chat Integration: Empowering Everyday Users

OpenAI has also made GPT-4o mini available within Chat, its popular conversational AI platform. Free, Plus, and Team users can now leverage the power of GPT-4o mini directly within their Chat interactions. This accessibility democratizes AI, enabling individuals to benefit from the model’s capabilities in their daily communication and creative endeavors.

Fine-Tuning: Tailoring GPT-4o Mini to Your Needs

In a forthcoming update, OpenAI plans to introduce the ability to fine-tune GPT-4o mini. This highly anticipated feature will allow users to customize the model’s behavior for specific tasks and domains. Fine-tuning can significantly enhance the model’s performance in niche applications, making it even more versatile and adaptable to diverse use cases.

The Path Forward: Continuous Improvement and Innovation

OpenAI’s commitment to advancing AI doesn’t end with the release of GPT-4o mini. The company is actively working on further enhancements, including:

- Expanding Multimodal Capabilities: Support for audio and video inputs is in the pipeline, opening up new possibilities for content creation, analysis, and interaction.

- Enhanced Safety Measures: OpenAI remains dedicated to refining the model’s safety features, ensuring responsible and ethical AI use.

- Additional Features and Integrations: The company is constantly exploring new ways to improve the user experience and expand the model’s potential applications.

The future of GPT-4o mini is bright, and its evolution promises to redefine the landscape of affordable, accessible, and powerful AI.

Conclusion: GPT-4o Mini – Democratizing AI for All

GPT-4o mini stands as a testament to OpenAI’s commitment to making artificial intelligence accessible, affordable, and impactful for everyone. Its remarkable blend of power, versatility, and cost-efficiency has the potential to revolutionize how businesses and individuals interact with AI.

Whether you’re a developer seeking to build innovative applications, a content creator looking for inspiration, or a business owner aiming to streamline operations, GPT-4o mini offers a powerful toolset to achieve your goals. Its multimodal capabilities, exceptional performance, and unwavering commitment to safety make it a compelling choice for anyone looking to harness the potential of AI.

As GPT-4o mini continues to evolve with new features and enhancements, it promises to usher in a new era of AI democratization, where the benefits of artificial intelligence are no longer confined to a select few, but are accessible to all. Embracing GPT-4o mini is not just about adopting a new technology; it’s about embracing a future where AI empowers us to achieve more, connect better, and explore new frontiers of creativity and innovation.